Ethical vs. Unethical Use Of Artificial Intelligence In Cybersecurity: A Critical Analysis

As the digital world continues to evolve, the role of artificial intelligence (AI) in cybersecurity becomes increasingly crucial. You’ve likely seen references to AI making your devices smarter and protecting your data more effectively. But where is the line between ethical and unethical uses of AI in cybersecurity?

Using AI to safeguard systems can lead to more effective threat detection and response, creating a safer online environment. However, the same technology can be used unethically, for example in surveillance overreach and data privacy violations, which poses serious ethical dilemmas. Understanding these distinctions is essential to promoting responsible and beneficial AI applications in cybersecurity.

Amid these ongoing debates, establishing guidelines and best practices is paramount. Integrating ethical principles into AI development protects individual rights and strengthens trust in technology. By understanding the challenges and possible solutions, you can actively contribute to a more secure and ethical cyberspace.

Key Takeaways

- Ethical AI enhances threat detection without compromising privacy.

- Unethical AI practices can lead to surveillance and data misuse.

- Following best practices helps ensure balanced and fair AI development.

Fundamentals of Artificial Intelligence in Cybersecurity

Artificial Intelligence (AI) significantly influences cybersecurity, addressing various threats and leveraging advanced technologies. Grasping AI’s role in cybersecurity involves understanding its definition, the threat landscape, and key AI applications.

Defining Artificial Intelligence

Artificial Intelligence is the simulation of human intelligence in machines programmed to think and learn. In cybersecurity, AI can help predict, detect, and respond to threats, sometimes with little or no human input. These systems use algorithms, machine learning, and neural networks to analyze vast amounts of data at high speed. Key components of AI include:

- Machine Learning (ML): Enables systems to learn from data

- Natural Language Processing (NLP): Helps in understanding human language

- Neural Networks: Computational models loosely inspired by the structure of the human brain

- Deep Learning: A subtype of ML that uses neural networks with many layers of processing

Cybersecurity Threat Landscape

The cybersecurity threat landscape constantly evolves, with new and sophisticated attacks emerging regularly. AI addresses several key threats, including:

- Phishing: AI can analyze communication patterns to identify phishing attempts.

- Malware: AI-driven tools can detect and mitigate malware by recognizing unusual behavior.

- Insider Threats: Machine learning models are used to monitor and flag suspicious actions by internal users.

- DDoS Attacks: AI helps identify and mitigate distributed denial-of-service attacks swiftly.
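To make the phishing point concrete, here is a minimal sketch of how a model can "learn" phishing patterns from labeled examples. It uses a multinomial naive Bayes classifier, one classic text-classification technique chosen here for brevity, not necessarily what commercial filters use. The class name `NaiveBayesFilter` and the toy training messages are hypothetical; a real filter would train on large labeled corpora and also weigh headers, URLs, and sender reputation.

```python
import math
import re
from collections import Counter

LABELS = ("phish", "legit")

def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

class NaiveBayesFilter:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, tokens: list[str], label: str) -> float:
        vocab_size = len(set(self.word_counts["phish"]) | set(self.word_counts["legit"]))
        total_words = sum(self.word_counts[label].values())
        # log P(label) + sum of log P(token | label)
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for tok in tokens:
            count = self.word_counts[label][tok]  # Counter returns 0 for unseen tokens
            score += math.log((count + 1) / (total_words + vocab_size))
        return score

    def predict(self, text: str) -> str:
        tokens = tokenize(text)
        return max(LABELS, key=lambda label: self._log_score(tokens, label))

# Toy, hypothetical training data.
TRAINING = [
    ("Urgent: verify your account password now", "phish"),
    ("Your account is suspended, click this link to verify", "phish"),
    ("Confirm your password immediately to avoid suspension", "phish"),
    ("Meeting notes from the quarterly planning session", "legit"),
    ("Lunch on Friday with the team?", "legit"),
    ("Attached is the report for the planning meeting", "legit"),
]

clf = NaiveBayesFilter()
for text, label in TRAINING:
    clf.train(text, label)

print(clf.predict("Please verify your password at this link"))  # phish
print(clf.predict("Notes from the planning meeting"))            # legit
```

With six toy messages this is only an illustration of the learning step; the same structure scales to real training sets, where accuracy depends heavily on data quality.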

AI Technologies in Cybersecurity

AI technologies optimize cyber defenses through various applications. Notable AI technologies include:

- Predictive Analytics: Forecasts potential threats based on historical data.

- Automated Response: Responds to threats automatically, with little or no human intervention.

- Behavioral Analysis: Monitors user activities for anomalies.

- Threat Intelligence: Aggregates data from multiple sources to provide actionable insights.

- Identity Verification: Uses biometric verification to strengthen access control.
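The threat-intelligence item above involves aggregating data from multiple sources. A minimal sketch of one aggregation rule, assuming each feed is simply a list of indicators (IP addresses or domains): keep only indicators that several independent feeds agree on. The function name, feed names, and `min_sources` parameter are hypothetical; the example values come from documentation-reserved IP ranges and the `.example` domain.

```python
from collections import defaultdict

def aggregate_indicators(feeds: dict[str, list[str]], min_sources: int = 2) -> dict[str, list[str]]:
    """Merge indicator feeds and keep indicators that at least `min_sources`
    distinct feeds agree on, mapped to the feeds that reported them."""
    reporters: defaultdict[str, set[str]] = defaultdict(set)
    for feed_name, indicators in feeds.items():
        for indicator in indicators:
            # Normalize so "Malware.example " and "malware.example" match.
            reporters[indicator.strip().lower()].add(feed_name)
    return {
        indicator: sorted(sources)
        for indicator, sources in reporters.items()
        if len(sources) >= min_sources
    }

# Hypothetical feeds using documentation-reserved IPs and domains.
feeds = {
    "feed_a": ["203.0.113.7", "malware.example"],
    "feed_b": ["203.0.113.7", "phish.example"],
    "feed_c": ["Malware.example ", "198.51.100.9"],
}
print(aggregate_indicators(feeds))
```

Requiring corroboration trades recall for precision: single-source indicators are dropped, which reduces false positives but may miss genuinely new threats.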

Adopting these technologies helps strengthen your cybersecurity framework, providing robust defenses against diverse digital threats.

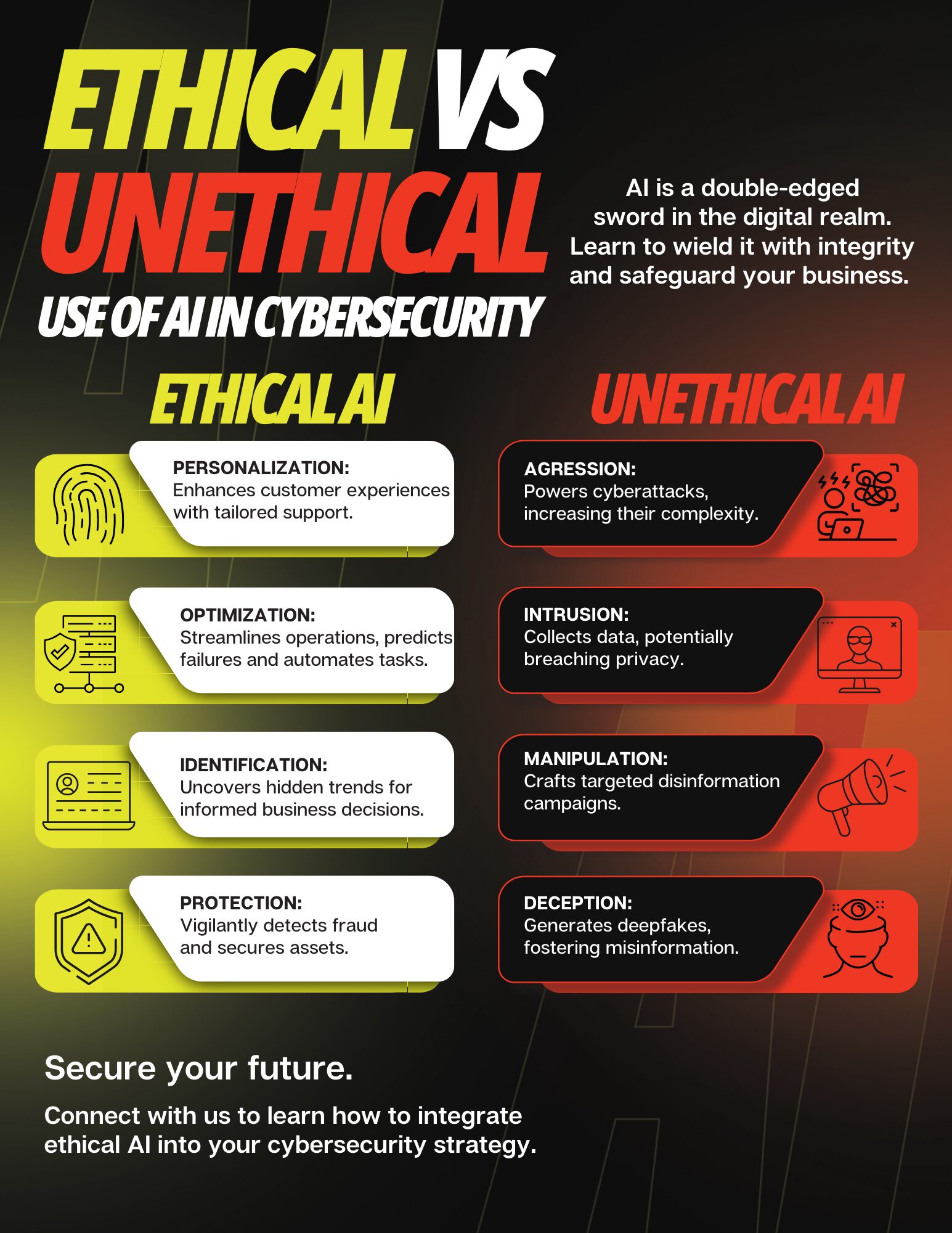

Ethical Use of AI in Cybersecurity

Artificial intelligence (AI) has become crucial in cybersecurity for enhancing security, protecting data privacy, and deploying defensive strategies. Used ethically, it can protect systems and data while respecting privacy and security norms.

Enhancing Security Measures

AI technology improves overall security by detecting threats faster than human analysts can on their own. AI systems use machine learning and predictive analytics to identify potential vulnerabilities before attackers can exploit them. This proactive stance helps safeguard sensitive data and network integrity.

Automated response systems powered by AI can address breaches promptly, minimizing potential damage. Ethical AI deployment prioritizes transparency, so that the system's actions are understandable and accountable.
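One way to make automated responses transparent and accountable is to record every action together with the evidence behind it. The sketch below assumes a model has already produced a maliciousness score between 0 and 1; `auto_contain`, `ResponseRecord`, and the 0.9 threshold are hypothetical, and "quarantine" is only a label, since no real system is touched.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class ResponseRecord:
    host: str
    action: str
    reason: str
    model_score: float
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

AUDIT_LOG: list[ResponseRecord] = []

def auto_contain(host: str, model_score: float, threshold: float = 0.9) -> str:
    """Choose a (simulated) response and log why it was chosen."""
    if model_score >= threshold:
        action, comparison = "quarantine", ">="
    else:
        action, comparison = "monitor", "<"
    reason = f"score {model_score:.2f} {comparison} threshold {threshold:.2f}"
    AUDIT_LOG.append(ResponseRecord(host, action, reason, model_score))
    return action

auto_contain("ws-042", 0.97)
auto_contain("ws-017", 0.40)
print(json.dumps([asdict(r) for r in AUDIT_LOG], indent=2))
```

Because each entry states the score and threshold that triggered it, an analyst can later reconstruct and challenge the decision.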

Data Privacy and Protection

Ethical AI in cybersecurity emphasizes data privacy. AI tools should be designed to comply with data protection laws such as the GDPR and the CCPA, so that personal data is handled responsibly. Encryption and anonymization techniques help protect user data from unauthorized access.
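A common building block for the anonymization point is keyed pseudonymization: replacing direct identifiers with tokens that stay stable (so analytics still work) but cannot be reversed without a secret key. The sketch below uses HMAC-SHA256 from Python's standard library; the field names and the inline key are placeholders, and a real system would load the key from a secrets manager. Pseudonymized data generally still counts as personal data under the GDPR, so this reduces exposure rather than removing legal obligations.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, key: bytes) -> str:
    """Same identifier + same key -> same token; without the key the token
    cannot feasibly be reversed or recomputed."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def pseudonymize_event(event: dict, key: bytes, fields: tuple[str, ...] = ("user", "email")) -> dict:
    """Return a copy of `event` with the listed identifier fields replaced."""
    out = dict(event)
    for name in fields:
        if name in out:
            out[name] = pseudonymize(str(out[name]), key)
    return out

KEY = b"replace-with-a-key-from-a-secrets-manager"  # placeholder only
event = {"user": "alice", "email": "alice@example.com", "src_ip": "10.0.0.5", "action": "login"}
print(pseudonymize_event(event, KEY))
```

A keyed HMAC is used instead of a plain hash because identifiers like usernames are easy to guess, and an unkeyed hash of a guessable value can be reversed by brute force.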

Consent mechanisms help ensure that, where processing relies on consent, user data is collected and used only with the user's explicit permission. This respects user privacy and supports regulatory compliance.
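The consent idea can be sketched as a purpose-specific check that runs before any record reaches an AI pipeline. `ConsentRegistry`, `filter_by_consent`, and the purpose name `behavior_analytics` are hypothetical. Under the GDPR, consent is only one of several lawful bases for processing, so a real system would track the applicable basis per purpose rather than consent alone.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRegistry:
    # Maps a user id to the set of purposes that user has agreed to.
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, user: str, purpose: str) -> None:
        self.grants.setdefault(user, set()).add(purpose)

    def revoke(self, user: str, purpose: str) -> None:
        self.grants.get(user, set()).discard(purpose)

    def allows(self, user: str, purpose: str) -> bool:
        return purpose in self.grants.get(user, set())

def filter_by_consent(records: list[dict], registry: ConsentRegistry, purpose: str) -> list[dict]:
    """Keep only records whose subject consented to this specific purpose."""
    return [r for r in records if registry.allows(r["user"], purpose)]

registry = ConsentRegistry()
registry.grant("alice", "behavior_analytics")
records = [{"user": "alice", "logins": 12}, {"user": "bob", "logins": 7}]
print(filter_by_consent(records, registry, "behavior_analytics"))  # only alice's record
registry.revoke("alice", "behavior_analytics")
print(filter_by_consent(records, registry, "behavior_analytics"))  # []
```

Checking consent per purpose, rather than a single yes/no flag, means agreeing to one use of data does not silently authorize another.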

AI for Defensive Strategies

Through behavior analysis and anomaly detection, AI helps develop robust defensive strategies against cyber threats. By continuously monitoring network traffic and identifying unusual patterns, AI systems can quickly respond to potential attacks.
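A minimal sketch of the "identify unusual patterns" step, using a rolling z-score over a traffic metric such as requests per minute. This is one simple statistical baseline rather than a full AI model; `RollingZScoreDetector`, the window of 30 observations, and the threshold of 3 standard deviations are illustrative assumptions.

```python
import math
from collections import deque

class RollingZScoreDetector:
    """Flags a value as anomalous when it lies more than `threshold` sample
    standard deviations from the mean of the previous `window` observations."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        self.history: deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= 2:  # need two points for a sample std dev
            mean = sum(self.history) / len(self.history)
            var = sum((x - mean) ** 2 for x in self.history) / (len(self.history) - 1)
            std = math.sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous

# Hypothetical requests-per-minute readings followed by a sudden spike.
detector = RollingZScoreDetector(window=10, threshold=3.0)
baseline = [100, 102, 98, 101, 99, 103, 97, 100]
flags = [detector.update(v) for v in baseline]
print(any(flags))              # False
print(detector.update(450))    # True
```

Flagged values are still added to the history here, which lets the baseline adapt but also lets a sustained attack gradually look normal; excluding flagged points is an equally reasonable design choice.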

Ethical use includes incorporating human oversight to review and validate AI decisions. This ensures that AI actions remain aligned with ethical standards and do not inadvertently cause harm.
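Human oversight is often implemented as a routing rule that decides which AI decisions may execute automatically and which wait for an analyst. The sketch below assumes each decision carries a model confidence score; the action names, the `HIGH_IMPACT_ACTIONS` set, and the 0.95 threshold are hypothetical policy choices.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    alert_id: str
    action: str        # hypothetical action names, e.g. "block_ip"
    confidence: float  # model confidence in [0, 1]

# Hypothetical policy: these actions always require an analyst, whatever the score.
HIGH_IMPACT_ACTIONS = {"disable_account", "wipe_device"}

def route(decision: Decision, auto_threshold: float = 0.95) -> str:
    """Return "auto" if the action may run unattended, else "human_review"."""
    if decision.action in HIGH_IMPACT_ACTIONS:
        return "human_review"
    if decision.confidence >= auto_threshold:
        return "auto"
    return "human_review"

for d in [
    Decision("a1", "block_ip", 0.99),
    Decision("a2", "block_ip", 0.80),
    Decision("a3", "disable_account", 0.999),
]:
    print(d.alert_id, route(d))
```

Keeping high-impact actions behind review regardless of confidence reflects the idea that a confident model can still be confidently wrong.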

Unethical Use of AI in Cybersecurity

Artificial intelligence can be a powerful tool for protecting against cyber threats. Yet unethical use of AI can cause significant harm, including launching cyber attacks, invading privacy, and perpetuating bias.

AI in Cyber Attacks

AI can be misused to launch sophisticated cyber attacks. Attackers might use AI algorithms to automate and enhance phishing schemes: AI-driven phishing can learn from user behavior to craft highly personalized, convincing emails that are harder to detect. Malicious AI can also crack passwords more effectively by learning the patterns people tend to follow when choosing them, weakening password security. Furthermore, AI-controlled bots can carry out distributed denial-of-service (DDoS) attacks more efficiently, overwhelming systems with traffic and causing significant disruption. These uses of AI in cyber attacks highlight the dangers of unethical applications.

Privacy Concerns and Surveillance

Using AI for surveillance raises substantial privacy concerns. Governments and organizations may use AI to monitor individuals, often without consent. AI systems can analyze vast amounts of data, including personal information, to track movements and activities. This constant monitoring can lead to an erosion of privacy rights and civil liberties. Additionally, unethical data collection practices can emerge, where data is gathered and stored without individuals’ knowledge. This data can be exploited, leading to issues like identity theft and misuse of personal information. The invasive nature of AI surveillance is a significant ethical concern, requiring careful consideration and robust regulations to protect privacy.

Bias and Discrimination in AI Systems

AI systems can also perpetuate bias and discrimination. When these systems learn from data that reflects societal biases, they can reinforce and magnify those biases. For instance, an AI used in cybersecurity might unfairly target certain groups based on biased historical data. This can result in discriminatory practices that affect hiring, access to services, or treatment by law enforcement. Biased AI in security settings can also misidentify threats, disproportionately flagging specific communities. Algorithmic bias can thus deepen societal inequalities, making it critical to address these issues in AI design and implementation.
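One way to check for the bias described above is to compare error rates across groups, for example the false positive rate (benign activity wrongly flagged as a threat) of a detection model. The records below are toy, hypothetical data, and the group labels stand in for whatever segments (departments, regions, user populations) an audit would examine.

```python
from collections import defaultdict

def false_positive_rates(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, flagged_by_model, actually_malicious) triples.
    FPR per group = benign cases flagged / all benign cases in that group."""
    flagged_benign: defaultdict[str, int] = defaultdict(int)
    benign: defaultdict[str, int] = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}

# Toy, hypothetical audit data.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", True, False), ("B", False, False),
    ("B", True, True),
]
rates = false_positive_rates(records)
print(rates)  # {'A': 0.25, 'B': 0.75}
print(f"FPR ratio B/A: {rates['B'] / rates['A']:.1f}")  # 3.0
```

A large gap between groups, as in this toy example where group B's benign activity is flagged three times as often, is a signal to review the training data and features; it does not by itself identify the cause.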

Regulations and Best Practices

In cybersecurity, regulations and best practices ensure that artificial intelligence (AI) is used responsibly and ethically. These guidelines help prevent misuse and promote security across various systems.

Legal Frameworks and Compliance

Legal frameworks for AI in cybersecurity play a crucial role in ensuring ethical practices. Frameworks such as the GDPR in Europe and the Blueprint for an AI Bill of Rights in the U.S. address data protection, privacy, and responsible AI use. Compliance with binding regulations such as the GDPR is mandatory for organizations within their scope, and non-compliance can result in significant penalties and reputational damage; the U.S. Blueprint, by contrast, is non-binding guidance. Cybersecurity professionals need to understand and follow these rules to secure sensitive data and maintain trust.

Developing Ethical AI Guidelines

Creating ethical AI guidelines involves establishing clear principles and best practices. Companies should form dedicated committees to oversee AI ethics. These committees should:

- Develop a code of conduct for AI use.

- Assess risks related to AI applications.

- Ensure transparency in AI decision-making.
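The "assess risks" duty is often operationalized as a simple risk register that scores each risk by likelihood and impact. The 1 to 5 scales, the banding thresholds, and the example risks below are hypothetical; an ethics committee would define its own.

```python
from dataclasses import dataclass

@dataclass
class AIRisk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Hypothetical bands; adjust to the organization's risk appetite.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"

def prioritize(risks: list[AIRisk]) -> list[AIRisk]:
    """Highest-scoring risks first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)

register = [
    AIRisk("Automated blocking disrupts a critical service", 2, 5),
    AIRisk("Biased training data inflates false positives for one group", 4, 4),
    AIRisk("Model logs retain raw personal data", 3, 4),
    AIRisk("Threat scores cannot be explained to affected users", 3, 2),
]
for risk in prioritize(register):
    print(f"{risk.level:<6} {risk.score:>2}  {risk.description}")
```

Multiplying likelihood by impact is a coarse heuristic; committees often add a rule that any risk with maximum impact gets reviewed regardless of its score.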

Training and educating staff on ethical considerations is also critical. By promoting ethical standards, organizations can reduce bias and help ensure that AI is used responsibly.

International Collaboration on AI Security

International collaboration is necessary to address the global nature of cybersecurity threats. Agencies and organizations across countries must work together to share knowledge and strategies. This collaboration can include:

- Joint research projects on AI security.

- Standardizing regulations across borders.

- Sharing best practices and threat intelligence.
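Sharing threat intelligence across borders is easier when everyone uses a common format. One existing open standard for this is STIX 2.1 from OASIS; the sketch below builds a minimal STIX 2.1 Indicator for a malicious IPv4 address using only the standard library. The function name and the example address (from a documentation-reserved range) are hypothetical, and a production exchange would validate objects against the specification, typically with a dedicated library, before sharing them.

```python
import json
import uuid
from datetime import datetime, timezone

def make_stix_indicator(name: str, ipv4: str) -> dict:
    """Minimal STIX 2.1 Indicator object describing a malicious IPv4 address."""
    # STIX timestamps are UTC with a trailing "Z"; millisecond precision here.
    now = datetime.now(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        "indicator_types": ["malicious-activity"],
        "pattern": f"[ipv4-addr:value = '{ipv4}']",
        "pattern_type": "stix",
        "valid_from": now,
    }

indicator = make_stix_indicator("Known command-and-control server", "203.0.113.7")
print(json.dumps(indicator, indent=2))
```

Because the object is plain JSON with standardized field names, partners in different countries can ingest it without agreeing on a bespoke schema first.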

By working together, nations can develop comprehensive, harmonized approaches to AI security. This cooperation can help prevent widespread breaches and support a unified defense against cyber threats.